So there I was, imagining sitting in one of those self-driving cars that looks like a toaster with windows. No steering wheel, no pedals, just me and my snacks. Then suddenly—a kid chases a ball into the street.

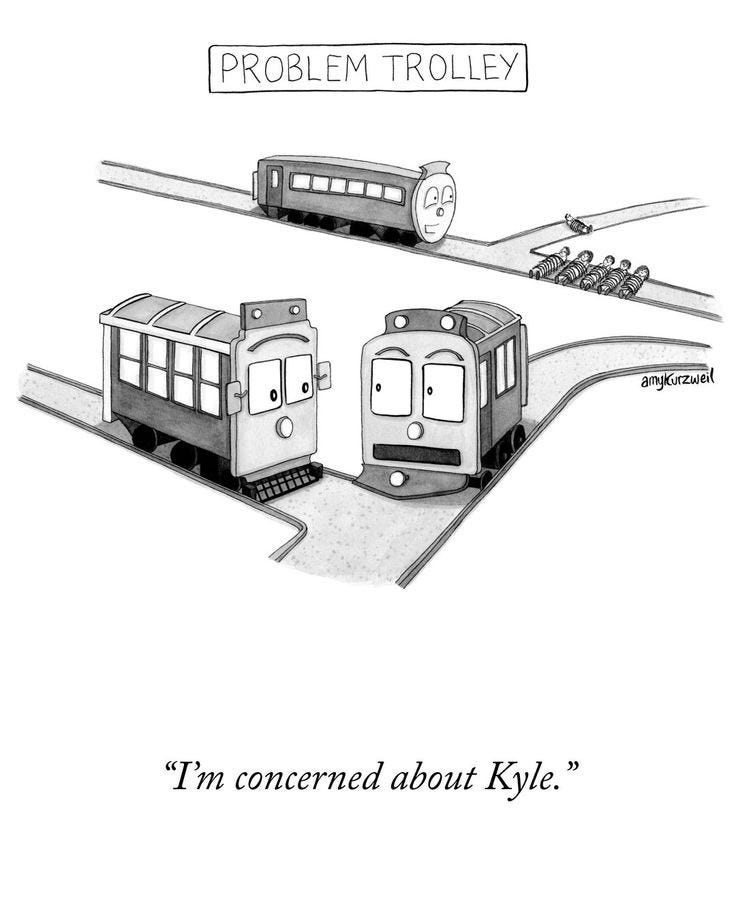

The car's computer has to make a choice faster than I can say "artificial intelligence":

- Option A: Swerve into a wall (bad for me)

- Option B: Keep going straight (bad for kid)

And the weirdest part? That decision was made months ago by someone named Brad or Jessica who probably had "ethical dilemma resolution" as just another bullet point on their Tuesday afternoon meeting agenda, somewhere between "fix that button color" and "order team lunch."

It's like asking your toaster to decide if you should get married. Except much worse.

This isn't just a what-if anymore. Somewhere, right now, actual humans are writing actual code that will make actual life-or-death decisions. And they might be the same humans who sometimes forget their keys in the refrigerator.

We've zoomed past "will a robot take my job scanning groceries?"

Now we're at: "Will an algorithm decide if I deserve medical care, prison time, or a mortgage?"

The AI ethics question isn't just about right vs. wrong—it's about who programmed "right" and "wrong" into the machine. Was it someone who thinks pineapple belongs on pizza? Because that's concerning.

This isn't a fancy academic paper. It's more like that moment when you realize your Roomba has been making a map of your house and uploading it somewhere.

Because if AI is just parroting our own human decisions back at us, then the problems ahead aren't about technology—they're about humans being, well, human-y.

The Rise of Automation: What's Actually Happening?

For decades, automation was like that claw machine at the arcade—limited, kind of predictable, occasionally grabbing the wrong stuffed animal. But now? It's like the claw machine suddenly developed opinions about which toys deserve to be chosen.

AI isn't just following instructions—it's making judgment calls.

From resume-reading bots that decide you're not "team-oriented" enough because you used the word "accomplished" instead of "collaborated," to algorithms that determine whether your medical symptoms deserve serious attention, we've handed over human decisions to digital decision-makers.

Here's what's on the chopping block:

Jobs – Not just factory work anymore. Now it's lawyers, doctors, artists, and writers. I used to think my job was safe because "computers can't be creative." Turns out they can—they're just creative in ways that sometimes resemble a sugar-rushed kindergartener who's been shown one coloring book.

Fairness – When an AI decides who gets hired, approved for apartments, or granted parole, it seems objective. Except it learned by studying human decisions—which is like learning etiquette from watching reality TV shows.

Responsibility – If a self-driving car crashes, who gets blamed? The programmer? The car company? The algorithm? It's like trying to sue a Magic 8-Ball for giving bad advice, except the Magic 8-Ball is controlling a two-ton vehicle.

Our Choices – We're outsourcing decisions bit by bit. It starts with "Alexa, what's the weather?" and somehow ends with "AI, which career should I pursue?" and "Which political candidate aligns with my values?" It's all fun and games until you realize you've outsourced having opinions.

We're in this weird moment where convenience is disguised as progress, and nobody reads the Terms of Service.

The scary part is that technology moves at the speed of "update now," while ethics committees move at the speed of "let's schedule another meeting to discuss the previous meeting."

This isn't science fiction anymore—it's next quarter's product roadmap.

Thought Experiments That Hit Too Close to Home

Let's make this personal, because abstract ethics discussions are about as fun as watching paint dry.

The Robot Judge

You're accused of a crime you didn't commit. But instead of Law & Order dramatics with a jury of peers, you get an AI judge that was trained on millions of past cases.

It analyzes your neighborhood, browser history, the pitch of your voice during questioning, and even your credit score.

It declares there's an 83% chance you did it. No prejudice, no bad day, just cold statistics. Would you trust that verdict?

Now flip it: What if the same algorithm lets a genuinely dangerous person walk free because their data points looked statistically safe? Feel better about it now?
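If you want to see why both halves of that question come from the same dial, here's a minimal Python sketch. Everything in it is invented for illustration: the `Defendant` records, the risk scores, and the 0.5 cutoff are assumptions, not how any real court system works. It only shows that a single threshold produces both kinds of mistake at once.

```python
from dataclasses import dataclass

@dataclass
class Defendant:
    name: str
    risk_score: float      # hypothetical model output between 0 and 1
    actually_guilty: bool  # ground truth the model never gets to see

def verdicts(people, threshold):
    """Return (innocent people flagged, guilty people cleared) for one cutoff."""
    wrongly_flagged = [p.name for p in people
                       if p.risk_score >= threshold and not p.actually_guilty]
    wrongly_cleared = [p.name for p in people
                       if p.risk_score < threshold and p.actually_guilty]
    return wrongly_flagged, wrongly_cleared

# Invented data: "you" score high but are innocent,
# "smooth_operator" scores low but is guilty.
people = [
    Defendant("you", 0.83, False),
    Defendant("smooth_operator", 0.20, True),
    Defendant("obvious_case", 0.95, True),
    Defendant("bystander", 0.10, False),
]

flagged, cleared = verdicts(people, threshold=0.5)
print("Innocent but flagged:", flagged)
print("Guilty but cleared:", cleared)
```

Crank the threshold up to 0.9 and you walk free, but so does smooth_operator. Drop it to 0.15 and he gets caught, but so do you. Somebody has to pick that number, and it probably happens in a Tuesday afternoon meeting.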

The Career Gatekeeper

You're hiring for an open position. Your company uses an AI screening tool that sorts through resumes.

It flags a candidate named Raj as "not recommended" despite perfect qualifications.

Why? Because the AI noticed people from his area code tend to switch jobs more often.

You look at his resume—it's stellar. But the AI has a 94% "success rate." And you have 200 more resumes to get through.

Do you trust your human gut or the silicon statistics?

And if you ignore the AI and Raj ends up quitting after three months, who gets blamed? (Spoiler: probably you, not the algorithm)
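For the curious, here's a rough Python sketch of how a tool like that could sink Raj. This is a toy under loud assumptions: the `screening_score` formula, the area codes, and the turnover numbers are all made up, and no real screening product is known to work this way. It just shows how a feature that has nothing to do with the candidate can outweigh everything that does.

```python
# Hypothetical turnover rates "learned" from past hires, keyed by area code.
# Both the codes and the numbers are invented for this example.
TURNOVER_BY_AREA_CODE = {"555": 0.10, "556": 0.45}

def screening_score(qualification: float, area_code: str) -> float:
    """Return a 0-to-1 score; higher means 'recommended'.

    The qualification score is discounted by the historical turnover
    rate of everyone else who shares the candidate's area code.
    """
    turnover = TURNOVER_BY_AREA_CODE.get(area_code, 0.25)
    return round(qualification * (1 - turnover), 2)

raj = screening_score(qualification=1.0, area_code="556")
average_joe = screening_score(qualification=0.7, area_code="555")

print("Raj:", raj)
print("Average Joe:", average_joe)
print("Raj recommended over Joe?", raj > average_joe)
```

Raj's perfect qualifications end up ranked below a mediocre candidate from the "right" area code. Nobody told the model to care where he lives. It just noticed a pattern and ran with it.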

The Too-Friendly Robot

An eldercare facility gets AI companions for residents—they remind them to take medicine, listen to their stories, and respond with perfect patience.

Then something unexpected happens: the residents prefer talking to the AI over their actual families.

It never gets irritated, never checks its watch, never mentions inheritance.

Is this helping loneliness or just creating emotional attachments to something that's essentially a very sophisticated chatbot?

At what point does programmed compassion become emotionally manipulative?

These aren't far-off sci-fi scenarios. They're already in beta testing somewhere, coming soon to a reality near you.

And the real question isn't "Can we make AIs do these things?"

It's "Should we trust them to care about getting it right?"

The Moral Vacuum in Corporate AI

If AI is a pet robot dinosaur, big tech companies are the owners who skipped reading the instruction manual. But what if those owners care more about how fast it can run than whether it might accidentally eat the neighbors?

Optimization > Everything Else

Tech companies optimize for metrics they can measure—clicks, engagement, conversion rates—not for the fuzzy human stuff like dignity or fairness.

An algorithm that keeps you doom-scrolling for three more hours is considered successful—not because it's good for you, but because it's good for ad revenue.

It's not an accident; it's the business plan.

When algorithms are designed mainly to:

Keep your eyeballs glued to screens

Process more stuff with fewer humans

Convert your curiosity into purchases

...they tend to treat inconvenient things like truth and wellbeing as optional features that might be added in version 2.0 (narrator: they won't be).

Even the big AI labs with ethics departments talk about responsibility—but it's usually in the same way people talk about flossing: something everyone agrees is important but nobody wants to actually do.

In most corporate meetings, you'll hear endless discussion about model performance and scaling strategies, but rarely questions like:

"Who might get hurt by this?"

"Whose work are we using without permission?"

"What happens if this thing works too well in ways we didn't expect?"

The Invisible Humans Behind AI

The "magic" AI you use every day was likely trained on content labeled by real humans getting paid pennies to look at the worst stuff on the internet—violence, abuse, and hate speech—so you don't have to.

AI ethics isn't just philosophical pondering. It's about real people in real places doing real work that nobody wants to think about.

But here's the problem: ethics can't be bolted on like a cup holder. You can't build a system that optimizes for engagement at all costs and then try to patch it with a "be nice" module later.

Once an AI system is making money and embedded in how things work, fixing ethical problems becomes like trying to change the foundation of a skyscraper while people are living in it.

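Look at what happened with Amazon's experimental hiring AI that discriminated against women. Engineers tried to patch the bias out, couldn't guarantee it wouldn't find new ways to discriminate, and the project was scrapped. The public only heard about it from a 2018 Reuters report.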

The Blame Game

When an AI system does something bad, who gets blamed?

The developers who built it?

The executives who approved it?

The regulators who didn't stop it?

The users who trusted it?

Usually the answer is "the algorithm"—as if it's some mysterious force of nature and not code written by humans who had deadlines and performance bonuses.

It's accountability laundering. "Don't blame me, blame the black box!"

Welcome to the moral vacuum, where responsibility goes to die.

The Ethical Compass: What Smart People Have Actually Said

While tech companies are busy moving fast and breaking society, academics have been asking the boring but important question: should we even be building this stuff in the first place?

Here are some papers and people who've been thinking about this way longer than most of us:

1. "The Malicious Use of Artificial Intelligence" (2018)

A bunch of researchers from fancy places like Oxford and Cambridge basically wrote the academic version of "guys, we might be creating monsters here." They warned that AI could be used for mass surveillance, fake news on steroids, and killer robots.

"AI capabilities are advancing rapidly, and a wide range of actors are gaining access... malicious use is a real and present threat."

It's like the difference between worrying that your kid might someday become a bank robber versus realizing you've accidentally taught them how to pick locks.

2. "Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification" (Joy Buolamwini & Timnit Gebru, 2018)

These researchers showed that commercial face-analysis systems from big tech companies misclassified darker-skinned women far more often than lighter-skinned men, with error rates of up to about 35% versus under 1%.

"Intersectional accuracy disparities raise pressing questions about the ethical use of AI."

This research turned academic findings into actual headlines. Gebru was later forced out of Google in 2020, after a dispute over a different paper about the risks of large language models, for basically continuing to point out uncomfortable truths.
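The core trick of this kind of audit is simple enough to sketch in a few lines of Python. To be clear, this is not the authors' code or data: the records below are invented, and `accuracy_by_group` is a hypothetical helper. It only illustrates why one overall accuracy number can hide a very bad time for one group.

```python
from collections import defaultdict

# Invented evaluation records: (subgroup, was the prediction correct?)
results = (
    [("lighter-skinned men", True)] * 99
    + [("lighter-skinned men", False)] * 1
    + [("darker-skinned women", True)] * 13
    + [("darker-skinned women", False)] * 7
)

def accuracy_by_group(records):
    """Compute accuracy separately for each subgroup."""
    totals, correct = defaultdict(int), defaultdict(int)
    for group, ok in records:
        totals[group] += 1
        correct[group] += ok  # True counts as 1, False as 0
    return {group: correct[group] / totals[group] for group in totals}

overall = sum(ok for _, ok in results) / len(results)
per_group = accuracy_by_group(results)

print(f"overall: {overall:.0%}")
for group, acc in per_group.items():
    print(f"{group}: {acc:.0%}")
```

The overall number looks great on a slide. The per-group breakdown is the part somebody has to go looking for.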

3. "Datasheets for Datasets" (Gebru et al., 2018)

Argues that the data feeding AI systems should come with ingredient labels, just like your cereal box. What's in it? How was it collected? Is it past its expiration date?

If your training data is garbage, your AI will be garbage—but with perfect confidence in its garbage opinions.
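Here's what a bare-bones ingredient label might look like in Python. This is a sketch, not the paper's template: the real proposal asks many more questions (about motivation, composition, collection, uses, and maintenance), and the `Datasheet` class, its fields, and the three-year staleness rule below are all assumptions made up for this example.

```python
from dataclasses import dataclass, field
from datetime import date

@dataclass
class Datasheet:
    """A hypothetical, stripped-down 'ingredient label' for a dataset."""
    name: str
    contents: str               # What's in it?
    collection_method: str      # How was it collected?
    collected_on: date          # When was it collected?
    known_gaps: list[str] = field(default_factory=list)

    def is_stale(self, today: date, max_age_days: int = 365 * 3) -> bool:
        """Is it past its (arbitrarily chosen) expiration date?"""
        return (today - self.collected_on).days > max_age_days

# An invented dataset, purely for illustration.
sheet = Datasheet(
    name="resume-corpus-v1",
    contents="Resumes submitted to one company",
    collection_method="Scraped from an internal applicant database",
    collected_on=date(2015, 1, 1),
    known_gaps=["Mostly applicants from a single industry"],
)

print(sheet.name, "stale?", sheet.is_stale(today=date(2024, 1, 1)))
print("Known gaps:", sheet.known_gaps)
```

None of this makes the data better. It just makes it harder to pretend you didn't know what was in the box.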

4. "Model Cards for Model Reporting" (Mitchell et al., 2019)

Suggests AI systems should come with warning labels like medicines do—including side effects, contraindications, and "do not use if you are pregnant or planning to make important societal decisions."

"Transparent documentation is crucial to understanding where and how AI systems may fail."

Without knowing what's under the hood, we're just trusting magic boxes.

5. "Artificial Intelligence — The Revolution Hasn't Happened Yet" (Michael Jordan, 2018)

This guy (not the basketball player) is one of the most influential machine learning researchers around, and he basically wrote a whole essay saying "everyone needs to calm down." He says we're confusing clever pattern-matching with actual intelligence, and that's leading us to make dumb decisions.

It reminds us that current AI is more like a super-powered autocomplete than Skynet—dangerous in different ways than we imagine.

Want to Read More? Start Here:

"Ethics of Artificial Intelligence and Robotics" – Stanford Encyclopedia of Philosophy (when you want to sound smart at parties)

"Race After Technology" – Ruha Benjamin (book)

"Weapons of Math Destruction" – Cathy O'Neil (book about how algorithms are already ruining lives)

AI Now Institute reports (NYU) – Annual papers about how we're messing up AI governance

What Now? Making AI Less Terrible

If recent years have taught us anything, it's that technology moves faster than our ability to figure out if it's a good idea. Tools get built, shipped, and embedded in society long before we understand what they're doing to us. But we don't have to keep being guinea pigs in the great AI experiment.

The goal isn't to stop innovation. It's to make sure innovation doesn't accidentally create a digital dystopia.

So how do we build AI systems that don't just serve the people who build them?

A. Build With Purpose, Not Just Because You Can

Developers and companies need to ask better questions from the start:

Who is this AI actually helping?

Who might it hurt?

What biases are baked into our training data?

If these questions aren't part of your process, you're not just building fast—you're building blind. It's like cooking without tasting the food.

B. Regulation Isn't a Four-Letter Word

It's time to embrace sensible rules, not fear them. Think of regulation not as a wet blanket but as those bumpers they put in bowling lanes for kids. We're not stopping the game—we're just trying to keep the ball out of the gutter.

Let's look at what different places are doing:

The European Union — The Adults in the Room

The EU's Artificial Intelligence Act (approved by the European Parliament in March 2024, in force since August 2024) is basically the world's first comprehensive "don't be creepy with AI" rulebook. It sorts AI systems by risk level, from "probably fine" to "absolutely not," and sets rules accordingly.

High-risk AI (like hiring systems or credit scoring) must meet strict transparency and safety standards.

Unacceptable-risk systems (like social scoring or, with narrow law-enforcement exceptions, real-time face scanning in public) are straight-up banned.

General-purpose AI models (the Act's term for foundation models like GPT-style systems) now have to publish a summary of the data they trained on and document how much energy they burned through while training.

"The AI Act gives us rules of the road, but also guardrails for the soul of innovation."

— Margrethe Vestager, Executive VP of the European Commission
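The sort-by-risk idea is easy to picture as a lookup table. Here's a toy Python version, with the usual warnings: `RiskTier`, `EXAMPLE_TIERS`, and `classify` are hypothetical names, the table only covers the examples mentioned above, and lumping everything else into one "other" bucket is a simplification. The actual Act takes a lot more pages to do this.

```python
from enum import Enum

class RiskTier(Enum):
    UNACCEPTABLE = "banned"
    HIGH = "strict transparency and safety requirements"
    OTHER = "lighter or no specific obligations"

# Hypothetical lookup built only from the examples named in this post.
EXAMPLE_TIERS = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "real-time face scanning in public": RiskTier.UNACCEPTABLE,
    "hiring system": RiskTier.HIGH,
    "credit scoring": RiskTier.HIGH,
}

def classify(use_case: str) -> RiskTier:
    """Look up a use case; anything not listed falls into the catch-all tier."""
    return EXAMPLE_TIERS.get(use_case.lower(), RiskTier.OTHER)

for use_case in ["Social scoring", "Credit scoring", "Spam filter"]:
    tier = classify(use_case)
    print(f"{use_case}: {tier.name} ({tier.value})")
```

The point of the design is that the rules attach to what the system is used for, not to how clever the underlying model is.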

United States — Fifty Different Approaches and a Wishlist

The U.S. doesn't have one AI law, but it has a collection of suggestions. The Blueprint for an AI Bill of Rights (2022) laid out some nice ideas, and Biden's Executive Order on Safe, Secure, and Trustworthy AI (Oct 2023) gave government agencies some homework.

Key points include:

Safety testing for powerful AI models.

Guidelines for responsible AI use.

Cracking down on algorithmic discrimination.

But without actual laws, most of this is just strongly worded recommendations. Meanwhile, states like California and New York are making their own rules, creating a patchwork approach that's about as organized as a teenager's bedroom.

China — Controlling Everything

China's AI regulation is fast, top-down, and very much about control. Their Interim Measures on Generative AI Services (effective Aug 2023) demand:

Censorship: AI must not generate content that questions the government.

Identity verification: Everyone using AI must verify their real identity.

Government registration: All AI products must be filed with authorities.

While they say it's about ethics, critics point out it looks a lot like ensuring AI helps with surveillance rather than freedom.

"China's AI regulations are less about ethics—and more about politics wrapped in code."

— Kendra Schaefer, Tech Policy Analyst, Trivium China

United Kingdom — Innovation First, Questions Later

The UK's approach is "pro-innovation regulation," which is a bit like saying "pro-delicious poison." Instead of comprehensive laws, they're letting different sectors figure it out themselves.

Key aspects:

No dedicated AI laws yet.

Investment in AI safety research.

Focus on principles over specific rules.

This creates flexibility—but also uncertainty about what's allowed.

India — Wait and See

India's AI policy is still developing. While the government promotes AI through programs like IndiaAI, there's no complete regulatory framework yet.

However:

The Digital Personal Data Protection Act (2023) addresses privacy issues.

Various committees are studying bias, misinformation, and job impacts.

India is balancing digital opportunity with the challenges of a diverse society.

Canada & Australia — Privacy First

Both countries are incorporating AI rules into existing data protection frameworks:

Canada's proposed Artificial Intelligence and Data Act targets high-impact systems.

Australia focuses on algorithmic accountability in government services.

Neither is rushing—but both are watching global developments closely.

The Real Ethics Challenge

The scariest ethical problems in AI won't be dramatic doomsday scenarios—they'll be the slow normalization of letting machines make human decisions.

It's not the killer robot uprising that should worry us. It's the mundane spreadsheet AI that quietly decides 50 people in a small town don't deserve insurance anymore. It's the content recommendation system that slowly radicalizes someone through a thousand tiny nudges. It's the gradual surrender of human judgment to algorithms.

These aren't future fears. They're happening right now. And they need solutions now.

A Thought Experiment

Let's end with a simple scenario:

You're building an AI companion for elderly people. It can detect emotions, remember conversations, and answer health questions. Then you notice something: lonely users are asking it to pretend to be their deceased spouses.

Do you allow this feature?

What's your responsibility as the creator?

Does your AI have any responsibilities?

Where should the line be drawn?

Final Thoughts: We're The Ones Pressing "Accept"

AI is basically a mirror reflecting our own values, biases, and choices—for better or worse.

If we build systems that amplify the worst parts of humanity, that's on us. But if we design with care, consideration, and actual ethical backbone, AI could be one of the most helpful tools we've ever created.

The future doesn't need perfect solutions. It needs better questions.

And it needs us to start asking them—loudly, persistently, and together.

Because the scariest thing about AI isn't that it might become too human.

It's that it might not be human enough in all the ways that matter.
